TOC is an extension of MCS , and as such extends on the topics originally covered in MCS, such as:

- Inductive proofs and definitions (also covered in ICM)

- Finite automata

- Kleene's Theorem

- Non-deterministic finite automata

- Grammars

- Regular grammars

- Context-free grammars

- Pushdown automata

- Chomsky hierarchy

| Level | Grammars/Languages | Grammar Productions | Machines |

|---|---|---|---|

| 0 | Unrestricted/recursively enumerable | α → β [α ∈ (V ∪ Σ)+, β ∈ (V ∪ Σ)*] | Turing Machine |

| 1 | Context-sensitive | α → β [α, β ∈ (V ∪ Σ)+, |α| ≤ |β|] | Linear-bounded automaton |

| 2 | Context-free | A → β [A ∈ V, β ∈ (V ∪ Σ)*] | Pushdown automaton |

| 3 | Regular | A → a, A → aB, A → Λ [A, B ∈ V, a ∈ Σ] | Finite automaton |

We could also consider a level 2.5, which is a deterministic context free grammars.

Context-Sensitive Languages

A grammar is context sensitive if α → β satisfies |α| ≤ |β|, with the possible exception of S → Λ. If S → Λ is present, then S must not occur in any right-hand side. The length restrictions here mean that you can generate all strings in a language up to a certain length (derivation trees).

Context-sensitive grammars generate context-sensitive languages, and every context free language is context sensitive.

Linear Bounded Automata

A linear bounded automata (LBA) is defined like a nondeterministic Turing machine (see next section), M, except that the initial config for input w has the form (q0, <w>) and the tape head can only move between < and >.

An equivalent definition allows inital configurations of the form (q0, <wΔn>), where n = cM|w|, for some constant cM ≥ 0. This allows to have multi-track machines, where cM represents the number of tracks.

Theorem: A language L is context sensitive if and only if L is accepted by some LBA.

Open research problem: Are deterministic linear bound automata as powerful as non-deterministic LBAs?

Turing Machines

Turing machines were introduced by Alan Turing in "On Computable Numbers with an Application to the Entscheidungsproblem" (The Entscheidungsproblem is whether or not a procedure of algorithm exists for a general problem).

A Turing Machine is an automata with consits of a 5-tuple (Q, Σ, Γ, q0, δ)

- Q is a finite set of states including ha and hr (the halting accept and reject states)

- Σ - the alphabet of input symbols

- Γ - the alphabet of tape symbols, including the blank symbol Δ, such that Σ ⊆ Γ - {Δ}

- q0 - the initial state, such that q0 ∈ Q

- δ - the transition function such that δ : (Q - {ha, hr}) × Γ → Q × Γ × {L, R, S}

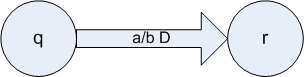

Intuitively, a Turing machine has a potentially infinite set of squares and a head moving on a type when reading character a whilst in state q, δ(q, a) = (r, b, D), which denotes a new state r, a is overwritten with b and the head either moves to the left (D = L), right (D = R), or remains stationary (D = S).

Configurations and Transitions

A configuration is a pair (q, uav) where q ∈ Q, a ∈ Γ and u, v ∈ Γ*. This configuration is considered to be the same as (q, uavΔ) - the rightmost blanks can be ignored.

A transition (q, uav) ├ (r, xby) describes a single move of a Turing machine, and we can write (q, uav) ├* (r, xby) for a sequence of transitions. This can be represented diagramatically as:

Convention also dictates that if there is no transition for what you want to do, a transition to hr is implied.

There are also special case transitions we can use:

- The initial configuration for input w ∈ Σ* is (q0, Δw)

- Configuration (q, xay) is a halting configuration if q = ha or q = hr, an accepting configuration if q = ha and a rejecting configuration if q = hr.

- Tape extension at the right end: (q, va) ├ (r, vbΔ) if δ(q, a) = (r, b, R) for some r ∈ Q and b ∈ Γ.

- A crash occurs at the left end of the tape: (q, av) ├ (hr, bv) if δ(q, a) = (r, b, L) for some r ∈ Q and b ∈ Γ.

The language accepted by the Turing machine M is L(M) = {w ∈ Σ* | (q0, Δw) ├* (ha, xay) for some x, y ∈ Γ* and a ∈ Γ}

Non-deterministic Turing Machines

A non-deterministic Turing machine (NTM) varies from the Turing machine in the transition function. δ: (Q - {ha, hr}) × Γ → 2Q × Γ × {L, R, S}

Theorem: For every NTM N, there exists a TM M such that L(M) = L(N)

Multi-tape Turing Machines

Intuitively, this is a machine with n tapes on which the machine works simultaneously. This is defined like the Turing machine, which has a revised definition of the transition function. δ: (Q - {ha, hr}) × Γn × {L, R, S}n, where n ≥ 2.

Configurations on a multi-tape machine have the form (q, u1a1v1, u2a2v2, ..., unanvn), and the intial configuration for an input w is (q0, Δw, Δ, ..., Δ), by convention.

Theorem: For every multi-tape machine N, there exists a TM M such that L(N) = L(M).

Recursively Enumerable

A language L is recursively enumerable if there exists a Turing machine that accepts L.

Theorem: A language L is recursively enumerable if and only if L is generated by some (unrestricted) grammar.

Enumerating Languages

A multitape TM enumerates L if:

- the computation begins with all tapes blank

- the tape head on tape 1 never moves left

- at each point in the computation, the contents of tape 1 has the form Δ#w1#w2#...#wnv, where n ≥ 0, w1 ∈ L, # ∈ (Γ - Σ) and v ∈ Σ*

- Every w ∈ L will eventually appear as one of the strings w on tape 1.

Theorem: A language L is recursively enumerable if and only if L is enumerated by some multitape Turing machine.

Computability

Decidability

A language L is decidable (or recursive) if it is accepted by some Turing machine M, that on every inputs eventually reaches a halting state (ha or hr), i.e., it does not get stuck and loop. We say that M decides L.

To decide whether w ∈ Σ* is in L, we run M on w until M reaches a halting configuration, then w ∈ L if and only if M is in state ha.

Theorem:

- Every context sensitive language is decidable - however as the converse is not true, we can consider decidable languages as level 0.5 on the Chomsky hierarchy.

- If a language L is decidable, then the complement = Σ* - L is also decidable

- If both L and are recursively enumerable, then L is decidable

Proof of Theorem 2

Let M be a Turing machine that accepts L and reaches a halting config on every input (is decidable). Modify M to machine as follows.

- Swap ha and hr by defining = hr and = ha.

- Put a new symbol at the start of the string (to detect the crash condition)

- Shift existing string one position to the right

- Put a special symbol (e.g., #) at the first square

- Move tape head to the second square (the old first square)

- Continue as for a normal TM

- δ is extended as (q, #) = (, #, S) ∀ q ∈ Q.

Therefore, L() = , and is decidable, as neither of the modifications described above can introduce looping.

Proof of Theorem 3

Intuitively, L(M) = L and L() = . For a string w, w ∈ L ∨ w ∈ - so at least one of those machines will accept the string. If the two machines are run in parallel and terminate as soon as one reaches an accept state, then it is impossible to get into a loop. This can be accomplished on a two-tape machine. The string is copied to tape 2 in the first instance, and then M is run on tape 1 and is run on tape 2.

We can express this formally as: Let M = (Q, Σ, Γ, q0, δ) and = (, Σ, , , ) be Turing machines that accept L and respectively. Without a loss of generality, we can assume that M and cannot crash at the left end of the tape.

We can construct a two-tape Turing machine M′ that decides L by copying its input to tape 2 and simulating M and in parallel.

- Let Q′ ⊇ Q × (we need additional states for initial copying, which are Q′ - (Q × )).

- Let δ′((q, ), (x, )) = ((r, ), (y, ), (D, )), where δ(q, x) = (r, y, D) and (, ) = (, , ). Also, we need to define:

- δ′((ha, ), (x, )) = (ha′, (x, ), (S, S)) ∀ ∈ , x ∈ Γ, ∈

- δ′((hr, ), (x, )) = (hr′, (x, ), (S, S)) ∀ ∈ , x ∈ Γ, ∈

- δ′((q, ), (x, )) = (hr′, (x, ), (S, S)) ∀ q ∈ Q, x ∈ Γ, ∈

- δ′((q, ), (x, )) = (ha′, (x, ), (S, S)) ∀ q ∈ Q, x ∈ Γ, ∈

To show that M′ decides L, we can consider two cases:

- w ∈ L. Then, on input w, M will reach ha. will either reach state , or it will loop. In both cases, M′ reaches state ha.

- w ∉ L, or w ∈ and on input w will reach accept state . M will either terminate in state hr, or will loop. In both cases, M′ reaches state hr′

Thus, L(M′) = L and M′ reaches a halting configuration on every input. We can transform M′ into a one-tape Turing machine M″ that accepts L and halts on the same inputs as M′. Thus, L is decidable.

Encoding Turing Machines

For whatever Turing machine we encounter, we want to encode the Turing machine into a string that represents the Turing machine. If we let Q = {q1, q2, ...} and S = {a1, a2, ...}, such that for every Turing machine (Q, Σ, Γ, q, δ), Q ⊆ Q and Γ ⊆ S, then we can define:

- s(Δ) = 0

- s(ai) = 0i + 1, for i ≥ 1

- s(ha) = 0

- s(hr) = 00

- s(qi) = 0i + 2

- s(S) = 0

- s(L) = 00

- s(R) = 000

If we let M = (Q, Σ, Γ, q*, δ), then we can let a transition t: (q, a) → (q′, b, D) is encoded as e(t) = s(q)1s(a)1s(q′)1s(b)1s(D)1, and e(M) is therefore s(ai1)1s(ai2)1...s(ain)11s(q*)1e(t1)e(t2)1...e(tk)1, where Σ = {ai1, ..., ain} and δ = {t1, tk}. Also, for x = x1x2...xk ∈ Sk, e(x) = 1s(x1)1s(x2)1...s(xk)1

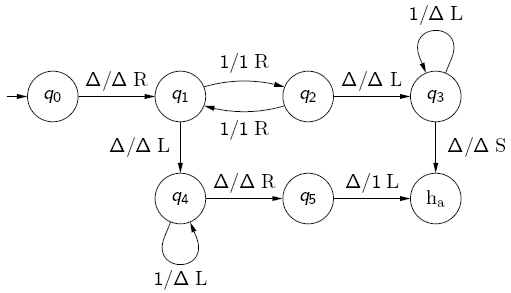

For example, for the following Turing machine, where Σ = {a, b} and Γ = {a, b, Δ}:

Suppose q = q1, a = a1 and b = a2, then we can encode this as e(M) = 00100011000100010100010100011..., which can be broken down as:

| 001 | 00011 | 0001 | 0001 | 01 | 0001 | 01 | 00011 |

| s(a) | s(b) | s(q) | s(q) | s(Δ) | s(q) | s(Δ) | s(R) |

| input alphabet | initial state | transitions | |||||

Tape symbols are states are implicit in the transitions, and therefore do not need to be explicitly defined. With this, you can feed the encoding machine into another machine (this is a similar concept to how compilers work).

The Self-Accepting Language

You can define the self-accepting language SA ⊆ {0, 1}* by SA = {w | w = e(M) for some Turing machine M and w ∈ L(M)}. This is basically saying that w is a code of a TM that accepts its own encoding. As such, we can define the completment of SA, = NSA = {w ∈ {0, 1}* | w ≠ e(M) ∀ Turing machines M} ∪ {w ∈ {0, 1}* | w = e(M) for some Turing machine M and w ∉ L(M)} - that is, a bit string that does not represent a TM, and a TM that doesn't accept itself.

We have two theorems about the self-accepting language:

- SA is recursively enumerable, but not decidable

- NSA is not recursively enumerable

Proof of Theorem 2

This is done by contradiction (indirect proof), or reducio ad absurdum and relies on the principle of tertium non dafur. If we suppose that NSA is recursively enumerable, then there is a Turing machine that accepts NSA (for Turing machine M, L(M) = NSA). If we consider the code e(M), then we have two cases:

- e(M) ∈ L(M). Then, by assumption, e(M) ∈ NSA. But then, by definition of NSA, e(M) ∉ L(M). This is obviously a contradiction.

- e(M) ∉ L(M). Then, by definition of NSA, e(M) ∈ NSA, hence by assumption e(M) ∈ L(M). This is again a contradiction.

It therefore follows that our assumption must be wrong. That is, NSA is not recursively enumerable.

The corollary of this is that SA is not decidable, and the proof of this is by definition of a decidable languages - "If a language is decidable, is also decidable". As NSA is not r.e., it is not decidable, therefore SA is not decidable.

Proof of Theorem 1

The corollary of the proof from theorem 2 is that SA is not decidable, and the proof of this is by definition of a decidable languages - "If a language is decidable, is also decidable". As NSA is not r.e., it is not decidable, therefore SA is not decidable.

We also need to consider the theorem that SA is recursively enumerable. To prove this, we need to consider a Turing machine TSA accepts SA by working as follows:

TSA first checks that its input w is the code of some Turing machine M (i.e., w = e(M)) and then Σ(M) includes {0, 1}. if this is not the case, TSA rejects w. Otherwise, TSA computes e(w) = e(e(M)) and runs U (the universal Turing machine) on e(M)e(w). It follows that TSA accepts w if and only if M accepts w. Hence L(TSA) = SA.

The Universal Turing Machine

The universal Turing machine is basically a Turing machine interpreter. It takes inputs of the form e(M)e(w), where M is a Turing machine and w is a string over Σ(M)*. The universal Turing machine will run machine M on input w, and accepts e(M)e(w) if w ∈ L(M) (M accepts w), rejects e(M)e(w) if w ∉ L(M) (M rejects w) and e(M)e(w) loops when M loops on w.

Most Languages are not Recursively Enumerable

A set S is countably infinite is there exists a bijective function N → S and is countable if it is countably infinite or finite. e.g., countable sets, N, Z, N × N and uncountable sets: R, 2N

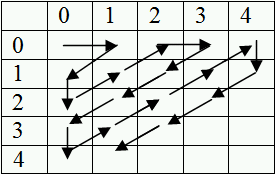

Cantor said that if you plot a matrix of pairs of numbers, and move diagonally you can represent any number with a bijective function, so pairs of numbers are countably infinite, as long as the base number is.

Lemmas:

- If S0, S1, ... are countable, then S = ∪∞i = 0Si is countable

- If S is an infinite set, then its power set 2S is uncountable

- If S is uncountable, and T is a countable subset of S, then S - T is uncountable

Proof of lemma 2: Let S be countably infinite, as otherwise 2S is obviously uncountable (there are uncountably many singleton subsets). We proceed by contradiction. Suppose 2S is countably infinite, then there is an infinite listing S0, S1, ... of the elements of 2S (i.e., of all the subsets of S). Since S is countably infinite, there is a bijection ƒ: N → S. We can define some subset of S as S′ by S′ = {ƒ(i) | i ≥ 0 ^ ƒ(i) ∉ Si}. Since S′ ⊆ S, there is some n such that S′ = Sn. We can consider two cases:

- ƒ(n) ∈ Sn, then ƒ(n) ∉ S′ = Sn, which is a contradiction

- ƒ(n) ∉ Sn, then ƒ(n) ∈ S′ = Sn, which is again a contradiction.

Theorem: For every nonempty alphabet Σ, there are uncountably many languages over Σ that are not recursively enumerable.

Proof: Every recursively enumerable language is accepted by some Turing machine. Since Turing machines can be encoded as strings over {0, 1} and {0, 1}* is countably infinite, the set of all Turing machines over Σ is countable as well (every subset of a countable set is countable). Hence, the set of all recusively enumerable languages over Σ is countable too. But, 2Σ*, the set of all languages over Σ is uncountable by lemma 2, thus by lemma 3: 2Σ* - {L ⊆ Σ* | L is r.e.} is uncountable as well.

Turing-computable Functions

A Turing machine M = (Q, Σ, Γ, q0, δ) computes the partial function ƒm(x) = {y if (q0, Δx) ├* (ha, Δy), undefined otherwise} - which says that ƒm(x) = the resulting string on the tape if it halts, otherwise it is undefined. We can generally change this unary function into a k-ary function by saying that M computes for every k ≥ 1. The k-ary partial function ƒm : (Σ*)k → Σ* is defined by ƒm(x1, ..., xk) = {y, if (q0, Δx1Δs2...Δxk) ├* (ha, Δy), undefined otherwise}.

A partial function ƒ : (Σ*)k → Σ* is Turing computable if there exists a Turing machine M such that ƒm = ƒ

Computing Numeric Functions

A partial function ƒ : Nk → N on natural numbers is computable if the corresponding function ({1}*)k → {1}* is computable, where each n ∈ N is represented by 1n.

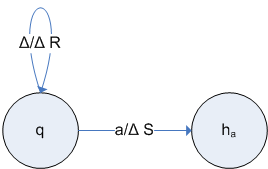

e.g., you could have a Turing machine computing ƒ : N → N with ƒ(x) = if x is even then 1 else 0, which could be represented as

Characteristic Function of a Language

For every alphabet Σ, fix to distinct strings w1, w2 ∈ Σ*. The characteristic function of a language L ⊆ Σ* is the total function XL : Σ* → Σ*, defined by XL = {w1 if x ∈ L, w2 otherwise.

Theorem: A language is decidable if and only if its characteristic function XL is computable.

Graph of a Partial Function

The graph of a partial function ƒ : Σ* → Σ* is the set, Graph(ƒ) = {(v, w) ∈ Σ* × Σ* | ƒ(v) = w}. This can be turned into a language over Σ ∪ {#}: Graph#(ƒ) = {v#w | (v, w) ∈ Graph(ƒ)}

We can have two theorems from this:

- A partial function ƒ : Σ* → Σ* is computable if and only if Graph#(ƒ) is recursively enumerable.

- A total function ƒ : Σ* → Σ* is computable if and only if Graph#(ƒ) is decidable.

Decision Problems

A decision problem is a set of questions, each of which has the answer yes or no.

We can say that these questions are instances of the decision problem; depending on their answers, they are either 'yes'-instances or 'no'-instances. To solve decision problems by Turing machines, instances are encoded as strings.

A decision problem P is decidable, or solvable, if its language of encoded 'yes'-instances is decidable. Otherwise, P is undecidable or unsolvable.

A decision problem P is semi-decidable if its language of encoded 'yes'-instances is recursively enumerable.

The Membership Problem for Regular Languages

Input: A regular expression r and w ∈ Σ*

Question: Is w ∈ L(r)

Problem: {(r, w) | r is a regular expression, w ∈ Σ*}

'Yes'-instances: {(r, w) | r is a regular expression and w ∈ L(r)

Encoded 'Yes'-instances: {encode(r)encode(w) | r is a regular expression and w ∈ L(r)}

This problem is decidable, as it can be turned into an automata.

The Self-Accepting Problem

Input: A Turing machine M

Question: Does M accept e(M)?

Problem: {M | M is a Turing machine}

'Yes'-instances: {M | M is a Turing machine and e(M) ∈ L(M)}

Encoded 'Yes'-instances: {e(M) | M is a Turing machine and e(M) ∈ L(M)}

The encoded 'Yes'-instances is the language SA, which is recursively enumerable (L(SA) can be enumerated), but not decidable, therefore this problem is semi-decidable.

The Membership Problem for Recursively Enumerable Languages

Input: A Turing machine M and w ∈ Σ*

Question: Is w ∈ L(M)?

Problem: {(M, w) | M is a Turing machine and w ∈ Σ*}

'Yes'-instances: {(M, w) | M is a Turing machine and w ∈ L(M)}

Encoded 'Yes'-instances: {e(M)e(w) | M is a Turing machine and w ∈ L(M)}

The encoded 'Yes'-instances is MP, the membership problem.

Theorem: The membership problem for recursively enumerable languages is undecidable, but semi-decidable.

Proof: We reduce the self accepting problem (SA) to the membership problem for recursively enumerable languages (MP). That is, we show that if MP is decidable, then SA is also decidable. Since SA was proved to be undecidable, MP must be undecidable too.

Suppose that MP is decidable. Then L(MP) is decidable, i.e., there is a Turing machine T that terminates for all inputs and accepts MP. We can construct a Turing machine T′ that decides SA as follows: T′ transforms its input e(M) into e(M)e(e(M)) (where e(M) = w and is placed in e(M)e(w)). T then starts on this string. This can be represented diagramatically:

T′ terminates for all inputs because T always terminates. Moreover, T′ accepts e(M) if and only if T accepts e(M)e(e(M)), that is, T′ accepts e(M) if and only if e(M) ∈ L(M).

Hence, T′ decides SA, therefore, if we can solve MP, we can solve SA. But, as SA was proved to be undecidable, this is a contradiction. Hence, our assumption that MP is decidable is false, i.e., MP is undecidable.

We can summarise this as: reduction of SA to MP - "If we can solve MP, we can also solve SA" ⇔ "SA is not more difficult than MP" ⇔ "MP is at least as difficult as SA"

Reducing Languages and Decision Problems

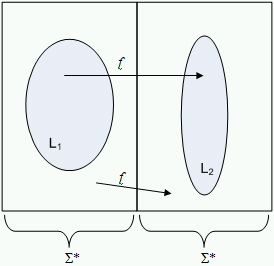

Let L1, L2 ⊆ Σ* be languages. L1 is reducible to L2 if there is a computable total function (i.e., there exists a Turing machine) ƒ : Σ* → Σ* such that for all x ∈ Σ*, x ∈ L1 if and only if ƒ(x) ∈ L2.

Let P1, P2 be decision problems and e(P1), e(P2) be the associated languages of the encoded 'Yes'-instances. We say that P1 is reducible to P2 if e(P1) is reducible to e(P2).

Theorem 1: Let L1, L2 be languages such that L1 is reducible to L2. If L2 is decidable, so is L1.

Theorem 2: Let P1, P2 be decision problems such that P1 is reducible to P2. If P2 is decidable, so is P1.

This diagram shows that the strings in L1 are mapped directly to strings in L2, and strings in are mapped to strings in .

L1 = SA = {e(M) | M is a Turing machine and e(M) ∈ L(M)} and L2 = MP = {e(M)e(w) | M is a Turing machine and w ∈ w ∈ L(M)}. ƒ : {0, 1}* → {0, 1}* with ƒ(x) = {xe(x) if x = e(M) for some Turing machine M, Λ otherwise.

Halting Problem

Input: A Turing machine M and a string w

Question: Does M reach a halting configuration on input w?

Theorem: The halting problem is undecidable, but semi-decidable

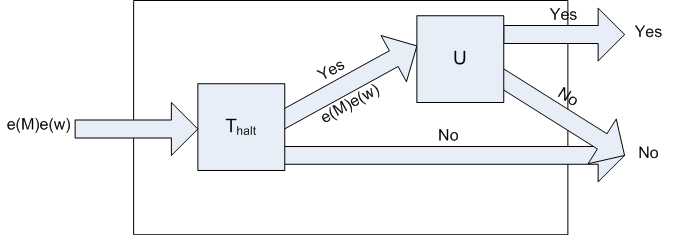

We reduce the membership problem for recursively enumerable languages (MP) to the halting problem (HP). Suppose that HP is decidable, then there is a TM Thalt that terminates on all inputs and accepts an input e(M)e(w) if and only if M reaches a halting configuration (terminates on input w).

We can construct a Turing machine T′ diagramatically as follows:

Note that if Thalt outputs 'yes' then U will terminate on e(M)e(w) with either Yes or No. Hence, T′ solves MP. Since MP is undecidable, our assumption that HP is decidable must be false.

Accept#

Theorem: The following problem (Accept#) is undecidable.

Input: A Turing machine M such that # ∈ Σ

Question: Is # ∈ L(M)

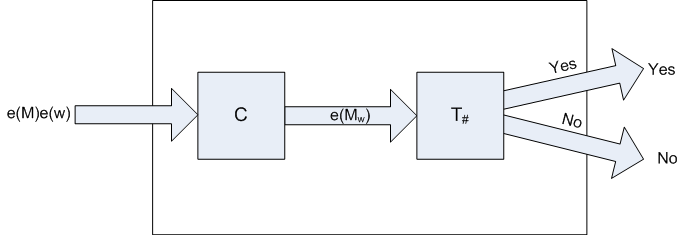

Proof: We reduce MP to Accept#. Suppose Accept# is decidable, then there is a Turing machine that halts on all inputs, accepting input e(M) if and only if # ∈ L(M). We can construct a Turing machine T′ as follows:

Where C is a Turing machine that transforms e(M)e(w) into the output e(Mw), where Mw is a Turing machine that ignores its input and runs M on input w. Now, T′ solves MP. We can consider two cases:

Case 1: w ∈ L(M). Then, by construction of Mw, Mw accepts every input and hence # ∈ L(Mw), thus T# accepts e(Mw) and T′ accepts e(M)e(w).

Case 2: w ∉ L(M). Then, by construction of Mw, Mw accepts no input and hence # ∉ L(Mw), this T# rejects e(Mw) and T′ rejects e(M)e(w).

Since MP is undecidable, Accept# must be undecidable as well.

More Undecidable Problems

These can all be proved by reduction.

- Input: A Turing machine M.

Question: Does M accept no input ("Is L(M) = ∅")? - Input: Two Turing machines M1 and M2.

Question: Do M1 and M2 accept the same inputs ("Is L(M1) = L(M2)")? - Input: A Turing machine M

Question: Is L(M) finite? - Input: A Turing machine M

Question: Is L(M) regular? - Input: A Turing machine M

Question: Is L(M) decidable?

Rice's Theorem (1953)

Let C be a proper, non-empty subset of all recursively enumerable languages. Then, the following problem is undecidable:

Input: A Turing machine M

Question: Is L(M) ∈ C?

In other words, every nontrivial property of recursively enumerable languages is undecidable. A property is nontrivial if it is satisfied by a proper, nonempty subset C of the class of all recursively enumerable languages. e.g., for the problem "Is L(M) finite?" C = {L = Σ* | L is finite}

Beware, Rice's theorem is about the languages accepted by Turing machines, not about the machines themselves.

Undecidable Problems About Context Free Grammars

Input: A context free grammar G

Question: Is L(G) = Σ*

Input: Two context free grammars G1 and G2

Question: Is L(G1) = L(G2)

Input: Two context free grammars G1 and G2

Question: Is L(G1) ∩ L(G2) = ∅?

Input: A context-free grammar G

Question: Is G ambiguous?

Input: A context-free grammar G

Question: Is L(G) inherently ambiguous?

In all the cases above, apart from the question 'Is G ambiguous?', the CFGs can be replaced by pushdown automata. However, in the case of 'Is G ambiguous?', this refers to a property of a grammar, not a language, so a pushdown automata can not be substituted in.

The Church-Turing Thesis

The Church-Turing thesis was proposed by Alonzo Church and Alan Turing in 1936 and is the statement that "Every effective procedure can be carried out by a Turing machine".

A functional version of this statement is that every partial function computable by an effective procedure is Turing-computable, and for decision problems, every decision problem solvable by an effective procedure is decidable.

This is only a thesis as an effective procedure (algorithm) can not be defined.

Complexity

(By convention when dealing with complexity, we only consider Turing machines, both deterministic and nondeterministic of which all computations eventually halt).

The time complexity of a Turing machine M is function τM : N → N, where τM(n) is the maximum number of transitions M can make on any input of length n. For a nondeterministic Turing machine N, τN(n) is the maximum number of transitions N can make on any input of length n by employing any choice of transitions. The time complexity of (possibly nondeterministic) multitape machines is defined analogously, and we define the time complexity as above as the worst-case complexity.

The space complexity of a Turing machine M is the function sM : N → N, where sM(n) is the maximum number of tape squares M visits on any input of length n. For a nondeterministic Turing machine N, sN(n) is the maximum number of tape squares N visits on any input of length n by employing any choice of transitions. For multitape Turing machines, the maximum number of tape squares refers to the maximum of the numbers for the individual tapes (which differs only be a constant factor from the maximal number of squares visited on all tapes altogether).

For every Turing machine M, sM(n) ≤ τM(n) + 1, as it is impossible for more squares to be visited than transitions made.

Growth Rate of Functions

Given a function f, g : N → N, f is of order g, written as f = Ο(g), or f ∈ Ο(g), if there is c, n0 such that ƒ(n) ≤ c . g(n) ∀ n ≥ n0.

Theorem, for a, b, r ∈ N with a, b > 1

- loga(n) = Ο(n) but n ≠ Ο(loga(n))

- nr = Ο(bn) but bn ≠ Ο(nr)

- bn = Ο(n!) but n! ≠ Ο(bn)

Using this 'Big-O' notation, we can create a hierarchy of complexity:

| Name | Ο |

|---|---|

| Constant | 1 |

| Logarithmic | log n |

| Linear | n |

| Log-linear | n log n |

| Quadratic | n2 |

| Cubic | n3 |

| Polynomial | np |

| Exponential | bn |

| Factorial | ∞ |

The table below shows examples of different growth rates for different values of n.

| log2(n) | n | n2 | n3 | 2n | n! |

|---|---|---|---|---|---|

| 2 | 5 | 25 | 125 | 32 | 120 |

| 3 | 10 | 100 | 1000 | 1024 | 3628800 |

| 4 | 20 | 400 | 8000 | 1048576 | 2.4 × 1014 |

| 5 | 50 | 2500 | 125000 | 1.1 × 1015 | 3.0 × 1064 |

| 6 | 100 | 10000 | 1000000 | 1.2 × 1030 | > 10157 |

Properties of Time Complexity

Theorem - multiple tapes vs. one tape: For every k-tape Turing machine M there is Turing machine M′ such that L(M′) = L(M) and τM′ = Ο(τM2).

Theorem - there are aribtrarily complex languages. Let ƒ be a total computable function. Then there is a decidable language L such that for every Turing machine M accepting L, τM is not bounded by ƒ.

Theorem - there need not exist a best Turing machine. There is a decidable language L such that for every Turing machine M accepting L, there is a Turing machine M′ such that L(M′) = L(M) and τM′ = Ο(log(τM)).

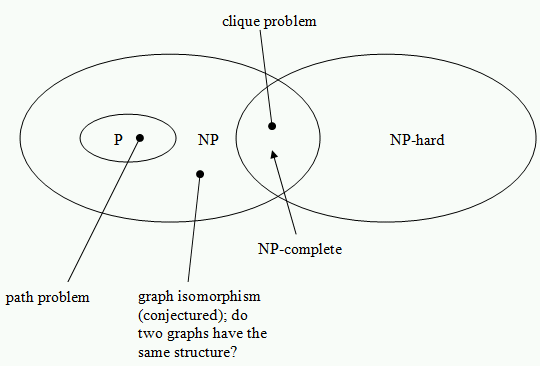

The Classes P and PSpace

A language L is recognisable in polynomial time if there is a deterministic Turing machine M accepting L such that τM = Ο(nr) for some r ∈ N. The class of all languages recognisable in polynomial time is denoted by P.

A language L is recognisable in polynomial space if there is a deterministic Turing machine M accepting L such that sM = Ο(nr) for some r ∈ N. The class of all languages recognisable in polynomial space is denoted by PSpace.

A decision problem is said to be in P resp. PSpace if its language of encoded 'yes'-instances is in P resp. PSpace.

The Classes NP and NPSpace

A language L is recognisable in nondeterministic polynomial time if there is a nondeterministic Turing machine N accepting L such that ΤN = Ο(nr), for some r ∈ N. The class of all languages recognisable in nondeterministic polynomial time is denoted by NP.

A language L is recognisable in nondeterministic polynomial space if there is a nondeterministic Turing machine N accepting L such that sN = Ο(nr) for some r ∈ N. The class of all languages recognisable in nondeterministic polynomial space is denoted by NPSpace.

A decision problem is said to be in NP resp. NPSpace if its language of encoded 'yes'-instances is in NP resp. NPSpace.

Example: A problem in P

A graph G = (V, E) consists of a finite set, V, of vertices (or nodes) and a finite set, E, of edges such that each edge is an unordered pair of nodes. A path is a sequence of nodes connected by edges. A complete graph (or clique) is a graph where each two nodes are connected by an edge.

The path problem has input of a graph G and nodes s and t in G and the question does G have a path from s to t?

The theorem is that the path problem is in P, and the proof for this is the following algorithm which solves the path problem:

On input (G, s, t) where G = (V, E) is a graph with nodes s and t:

- Mark node s

- Repeat until no additional nodes are marked

- scan all edges of G

- for each edge {v, v′} such that v is marked and v′ is unmarked, mark v.

- Check if t is marked - accept, otherwise reject

The running time of this algorithm can be considered by considering each stage. Stages 1 and 3 are considered only once. The body of the loop in stage 2 is executed at most |V| times, because each time except for the last, an unmarked node is marked. The body of the loop can be executed in time linear in |E| and stages 1 and 3 need only constant time. Hence, the overall running time is Ο(|V| × |E|). In the worst case, |E| = |V|2/2, and hence in Ο(|V|3).

Example: Problems in NP

The complete subgraph (clique) problem has the input of a graph G and k ≥ 1. The question is does G have a complete subgraph with k vertices?

The Hamiltonian circuit problem takes an input of a graph G, and the question is does G have a Hamiltonian circuit (a path v0, ..., vn through all nodes of G such that v0 = vn and v1, ..., vn are pairwise distinct.

Theorem: The complete subgraph and the Hamiltonian circuit problems are in NP.

The clique problem can be solved by a nondeterministic Turing machine in polynomial time. N nondeterministically selects ("guesses") vertices v1, ..., vk in G and checks whether each pair {vi, vj}, 1 ≤ i ≤ j ≤ k is an edge in G. This checking can be done in time polynomial in |V| + k.

G can be represented as a string of vertices followed by a string of edges in the form of vertex pairs. Then, at most k2/2 vertex pairs have to be checked, which can be done in time |V|2/2 × k2/2.

Tractable Languages

A language is tractable if L ∈ P, otherwise L is intractable.

A language L is recognisable in exponential time if there is a Turing machine M accepting L such that τM = Ο(2nr) for some r ∈ N. The class of all languages recognisable in exponential time is denoted by ExpTime.

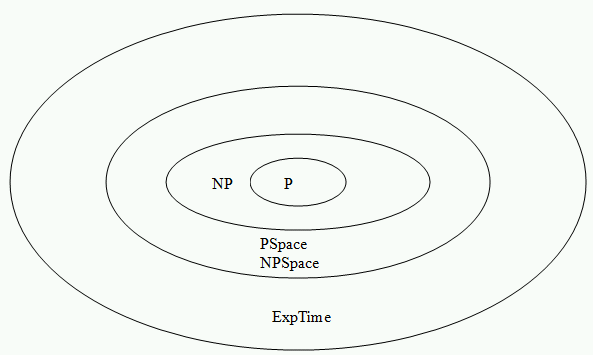

Theorem:

- P ⊆ NP ⊆ PSpace = NPSpace ⊆ ExpTime

- P ≠ ExpTime

There is an open research problem of does P = NP.

Conjectured Hierarchy of Complexity Classes

(Only P ≠ ExpTime is known, but it is not known whether the other subsets above are proper or not)

Inclusions P ⊆ NP and PSpace ⊆ NPSpace, as every deterministic Turing machine can be represented by a nondeterministic Turing machine.

Inclusions P ⊆ PSpace and NP ⊆ NPSpace, as every Turing machine (deterministic or nondeterministic) satisfies sM(n) ≤ τM(n) + 1, as we can not visit more squares than we can do transitions. Hence τM = Ο(nr) implies sM = Ο(nr).

Inclusions NPSpace ⊆ PSpace. This can be proved using Savitch's Theorem (which is nontrivial), which states that for every nondeterministic Turing machine N there is a Turing machine M such that L(M) = L(N) and sM = sN2, and a polynomial squared is still a polynomial.

Inclusions PSpace ⊆ ExpTime. For an input of length n each configuration of the Turing machine M can be written as follows: (q, a0...ai...asM(n)). For each part, there are |Q| possible states, sM(n) possible tape head positons and |Γ|sM(n) possible tape content configurations. Hence, the maximum number of configurations is |Q| × sm(n) × |Γ|sM(n) = Ο(Γ|sM.

A Turing machine computation that halts does not repeat any configurations. Hence τM = Ο(ksM for some k, and it follows our inclusion, hence PSpace ⊆ ExpTime (for k ≤ 2m we have knr ≤ (2m)nr = 2mnr = 2nm + r).

Polynomial-time Reductions

A function ƒ is polynomial-time computable if there is a Turing machine M with τM = Ο(nr) that computes ƒ.

Let L1, L2 ⊆ Σ* be languages. L1 is polynomial-time reducible to L2 if there is a polynomial-time computable total function ƒ : Σ* → Σ* such that for all x ∈ Σ*, x ∈ L1 if and only if ƒ(x) ∈ L2.

Theorem: Let L1 be polynomial-time reducible to L2. Then: L2 ∈ P implies L1 ∈ P; L2 ∈ NP implies L1 ∈ NP.

Proof

Since L2 ∈ P there is a Turing machine M2 with polynomial time complexity that decides L2. Moreover, since L1 is polynomial-time reducible to L2, there is a Turing machine M with polynomial-time complexity such that ƒM : Σ* → Σ* satisfies for all x ∈ Σ*, x ∈ L1 if and only if ƒM(x) ∈ L2.

Let M1 be the composite Turing machine MM2 which first runs M and then runs M2 on the output of M, then L(M1) = L1.

Since |ƒM(x)| can not exceed max(τM(|x|), |x|), the number of transitions of M1 is bounded by the sum of the following estimates of the seperate computations:

(a) τM1(n) ≤ τM(n) + τM2(max(τM(n), n))

Let τM = Ο(nr), then there are constants c and n0 with τM(n) ≤ c . nr ∀ n ≥ n0.

Let τM2 = Ο(nt), then there are constants c2 and n2 with τM2(n) ≤ c2 . nt ∀ n ≥ n2.

Hence τM2(n) ≤ c2.nt + d2, ∀ n ≥ 0, where d2 = {τM2(k) | k < n2}.

It follows τM2(τM(n)) ≤ c2 . (τM(n))t + d2, for all n ≥ 0, and hence τM2(τM(n)) ≤ c2 . (c . nr)t + d2, for all n ≥ 0

So, τM2(τM(n)) ≤ c2ct . nrt + d2 ∀ n ≥ 0.

Thus, by formula (a) above, τM1 = Ο(nrt), hence L1 ∈ P (r and t are constants, therefore nrt ∈ P)

Hardness

If L1 is polynomial-time reducible to L2, then L1 is no harder than L2. Equivalently, L2 is at least as hard as L1.

A language L is NP-hard if every language in NP is polynomial-time reducible to L; A NP-hard language is at least as hard to decide as every language in NP. Equivalently, no language in NP is harder to decide than any NP-hard language.

A language L is NP-complete if it is NP-hard and belongs to NP; a NP-complete language is a "hardest" language in NP. Corollary, for every NP-complete language L, L ∈ P if and only if P = NP.

Assuming P ≠ NP:

The Satisifability Problem

Consider boolean expressions built from variables xi and connectives ^, ∨ and ¬. A literal is either a variable xi, or a negated variable ¬xi. An expression C1 ^ C2 ^ ... ^ Cn is in conjunctive normal form (CNF) if C1, ..., Cn are disjunction of literals.

An expression C is satisfiable if there is an assignment of truth values to variables that make C true.

e.g., C = (x1 ∨ x3) ^ (¬x1 ∨ x2 ∨ x4) ^ ¬x2 ^ ¬x4. In this case, C is satisfiable by the assignment α = {x1, x2, x4 → false, x3 → true}

The satisfiability problem takes input of a boolean expression C in CNF, and the question is "Is C satisfiable?".

Theorem: The satisfiability problem is NP-complete. This was proved by S. A. Cook in 1971 was was the first problem to be shown to be NP-complete.

To prove a problem is NP-complete, any NP-complete problem should be reducible to it. e.g., a polynomial-time reduction of the satisfiability problem to the clique problem. What we need is a function ƒ : x → (Gx, kx), where x if a CNF expression and (Gx, kx) is a pair depending on x, such that x is satisfiable if and only if Gx has a clique with kx vertices.

Let x = ^ci = 1∨dij = 1ai, j, where ^ and ∨ are conjuncts and each ai, j is a literal.

Observation: Pick one literal from each conjunct and connect each pair of picked literals that is not complimentary (e.g., x and ¬x are complimentary). If this yields a complete graph, then x is satisfiable. Vice versa, if x is satisfiable, then a complete subgraph can be constructed in this way.

Idea: Choose Gx as the graph consisting of all occurences of literals and all connections between literals that are in different conjuncts and not complimentary.

Define Gx = (Vx, Ex) by Vx = {(i, j) | 1 ≤ i ≤ c ^ 1 ≤ j ≤ di} and Ex = {((i, j), (l, m)) | i ≠ l ^ ai, j ¬≡ ¬al, m}, and let kx = c.

By construction of Gx: Two vertices are linked if and only if their literals are in different conjuncts and there is a truth assignment making both literals true. Next, we show that ƒ is a reduction from the satisfiability problem to the clique problem.

"Only if": Let x be satisfiable. Then there is a truth assignment α such that for each i, there is some literal ai, ji with α(ai, ji) = true. Hence, by construction of Ex, the vertices (1, j1), (2, j2), ..., (kx, jkx) forms a clique in Gx. Note that ai, ji ¬≡ ¬ai, ji, because α(ai, ji) = true = α(ai, ji).

"If": Let Gx have a clique with kx many vertices. Then, by definition of Ex, the literals of these vertices must be pairwise non-complimentary. Hence, there is a truth assignemtn Θ making all of these literals true. Moreover, by the definition of Ex, these kx literals are in kx different conjuncts. So α makes each of the kx = c conjuncts true. Thus, x is satisfiable.